Unlike a lot of arguments and theories in SEO, the debate around click-depth from a website's prominent pages is relatively black and white, thanks to what Google have publicly said on the matter.

Why This Matters

Unless a website is very small and relatively authoritative, Google doesn't crawl it in its entirety.

We also know from looking at server logs, for multiple websites big and small, that Google never forgets a URI path, and can even keep crawling URIs that have been returning a 301 for six years.

It’s also worth noting that Googlebot doesn’t always enter through the homepage, meaning it needs to be able to navigate between pages on the website – and find internal links to important pages easily.

The initial schools of thought focused on site elements like URL structure and subfolder depth; however, Google's John Mueller clarified this on Twitter:

It’s more a matter of how many links you have to click through to actually get to that content rather than what the URL structure itself looks like.

You can find the full explanation in the Webmaster Hangout here.

During the hangout, John also said the following with regard to click-depth:

What does matter for us a little bit is how easy it is to actually find the content there. So especially, if your home page is generally the strongest page on your website, and from the home page it takes multiple clicks to actually get to one of these stores, then that makes it a lot harder for us to understand that these stores are actually pretty important.

And:

On the other hand, if it’s one click from the home page to one of these stores, then that tells us that these stores are probably pretty relevant and that, probably, we should be giving them a little bit of weight in the search results as well.

He further clarified, during a live Q&A I held with him, that Google doesn't read keywords in URL structures and doesn't read slashes in the URL, so there's very little difference between /service and /service-category/service.

The important takeaways from this statement are, first, that Google have described how much this matters to them as “a little bit”, and second, something I often see mistaken when click-depth is audited: the notion that click-depth is always measured from the homepage.

As John phrased it, “if your home page is generally the strongest page on your website”. On a lot of websites this might not be the case: some websites have a number of strong pages, and depending on the content and relevancy of those other pages, link/click depth from them will matter too.
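To make the distinction concrete, here is a minimal Python sketch (assuming Python 3.9+) that measures click-depth with a breadth-first search over a hypothetical internal link graph. The function name click_depths and all of the URLs are illustrative assumptions rather than part of any tool; in practice the link graph would come from a crawler export. Running it from the homepage alone, and then from the homepage plus a second strong page, shows how the same store page can look deep or shallow depending on where you measure from.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], sources: list[str]) -> dict[str, int]:
    """Return the minimum number of clicks from any source page to each reachable page.

    `links` maps each URL to the URLs it links to (a hypothetical internal link graph).
    Passing several sources runs a multi-source breadth-first search, so each page's
    depth is measured from whichever strong page is closest.
    """
    depths = {src: 0 for src in sources}
    queue = deque(sources)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: the homepage links to a category, and a strong guide page
# (e.g. one attracting many external links) links directly to a deep store page.
site = {
    "/": ["/stores/", "/guides/"],
    "/stores/": ["/stores/north/"],
    "/stores/north/": ["/stores/north/leeds/"],
    "/guides/": ["/guides/best-stores/"],
    "/guides/best-stores/": ["/stores/north/leeds/"],
}

print(click_depths(site, ["/"])["/stores/north/leeds/"])  # 3
print(click_depths(site, ["/", "/guides/best-stores/"])["/stores/north/leeds/"])  # 1
```

Because breadth-first search visits pages in order of distance, the first time a page is reached gives its shortest click path; seeding the queue with several strong pages gives each page its distance from the nearest one.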

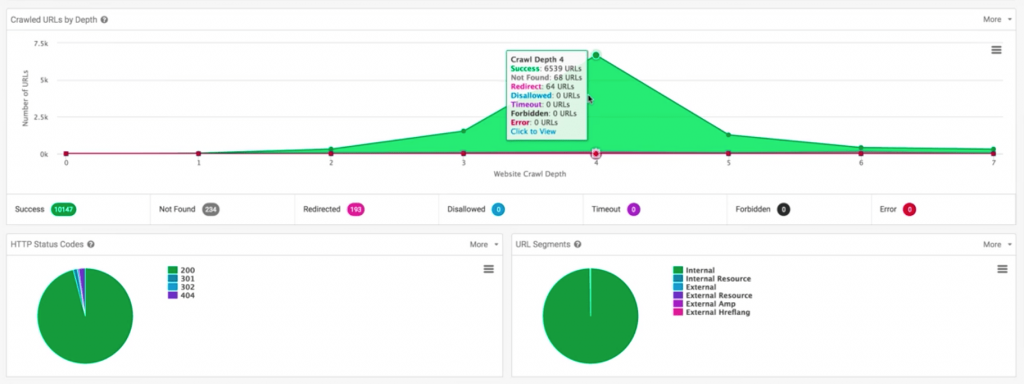

When we run tools such as Sitebulb to analyse our websites, we nigh on always start from the root domain, which 99.9% of the time will be “the homepage”.

So when we look at crawl depth, we're measuring it from the root domain. It's also very important to combine click-depth reporting with internal link reporting, as a click-depth of three might not be an issue if the page receives plenty of internal links (in comparison to other pages on the website).
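One rough way to combine the two reports is sketched below in Python, assuming you have exported internal links as (source, target) URL pairs from your crawler. The audit function, the example URLs and the thresholds (max_depth=3, min_inlinks=2) are hypothetical choices for illustration only; sensible values depend on how the rest of the site is linked.

```python
from collections import Counter, deque


def audit(edges: list[tuple[str, str]], root: str = "/",
          max_depth: int = 3, min_inlinks: int = 2) -> list[tuple[str, int, int]]:
    """Flag pages that are both deep (measured from `root`) and weakly linked internally.

    Returns (url, click_depth, inlink_count) tuples, deepest first. The thresholds
    are illustrative assumptions, not values documented by Google.
    """
    graph: dict[str, list[str]] = {}
    for src, dst in edges:
        graph.setdefault(src, []).append(dst)
    # Count distinct linking pages per target (duplicates and self-links ignored)
    inlinks = Counter(dst for src, dst in set(edges) if src != dst)

    # Breadth-first search from the root gives each page's shortest click path
    depths = {root: 0}
    queue = deque([root])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)

    flagged = [(url, d, inlinks[url]) for url, d in depths.items()
               if d >= max_depth and inlinks[url] < min_inlinks]
    return sorted(flagged, key=lambda row: -row[1])


# Hypothetical crawl export
edges = [
    ("/", "/blog/"), ("/", "/services/"),
    ("/blog/", "/blog/page-2/"),
    ("/blog/page-2/", "/blog/old-post/"),
    ("/blog/page-2/", "/services/seo/audits/"),
    ("/services/", "/services/seo/"),
    ("/services/seo/", "/services/seo/audits/"),
]

print(audit(edges))  # [('/blog/old-post/', 3, 1)]
```

Here /services/seo/audits/ sits at the same depth as the old blog post but is not flagged, because two distinct pages link to it.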

So how can we optimise for click-depth, and put websites in the best possible position for Google to reach important content in fewer clicks?

Optimizing For Click-Depth

In order to optimize for click-depth, we need to understand the relationship between crawl-depth and SEO performance.

Simply put, research has shown that the further a page sits from a website's higher-authority pages, the less frequently it is crawled, and that crawl frequency correlates with organic search performance.

That said, over-optimizing by introducing high volumes of unnatural links with over-optimized anchor text can have a negative impact.

Improving this metric (or score, or however you choose to quantify the results of your crawl-depth optimization) ultimately comes down to the website you're working on, its structure (centralized or decentralized), and how internal links can naturally pass between pages in a logical (UX-logical) manner, rather than being added just for the sake of linking.

At a top level, elements we can look at improving are:

- Implement linking nests between pages that support each other around a common topic

- Reduce potential crawl traps and endless crawl spirals

- Reduce excessive structural linking, e.g. excessively linked pagination and hygiene pages

- Introduce HTML sitemaps at both macro and micro levels to create further internal linking nests

- Create centralized linking hubs for topics

- Improve site response times to reduce inefficiencies within Google’s crawling process

- Implement useful breadcrumbs

- Implement secondary navigations
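To illustrate why centralized hubs and HTML sitemaps can help, the Python sketch below compares a hypothetical blog whose posts are only reachable through a chain of paginated archive pages with the same blog after a topic hub, linked from the homepage, is added. The site structure, URLs and helper names (paginated_blog, deepest_post) are invented for illustration and assume Python 3.9+.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], root: str = "/") -> dict[str, int]:
    """Shortest click path from `root` to every reachable page (breadth-first search)."""
    depths = {root: 0}
    queue = deque([root])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def paginated_blog(articles: int, per_page: int) -> dict[str, list[str]]:
    """Hypothetical blog: the homepage links to /blog/page-1/, and each archive
    page links to its own posts plus the next archive page only."""
    pages = (articles + per_page - 1) // per_page  # ceiling division
    links: dict[str, list[str]] = {"/": ["/blog/page-1/"]}
    for p in range(1, pages + 1):
        first = (p - 1) * per_page
        posts = [f"/blog/post-{i}/" for i in range(first, min(first + per_page, articles))]
        next_page = [f"/blog/page-{p + 1}/"] if p < pages else []
        links[f"/blog/page-{p}/"] = posts + next_page
    return links


def deepest_post(links: dict[str, list[str]]) -> int:
    """Click-depth of the hardest-to-reach post."""
    depths = click_depths(links)
    return max(d for url, d in depths.items() if url.startswith("/blog/post-"))


before = paginated_blog(articles=100, per_page=10)

# Copy the site (including each link list), then add a hypothetical topic hub
# linked from the homepage that links to every post
after = {url: list(targets) for url, targets in before.items()}
after["/"].append("/blog/topics/")
after["/blog/topics/"] = [f"/blog/post-{i}/" for i in range(100)]

print(deepest_post(before))  # 11
print(deepest_post(after))   # 2
```

The archive pages themselves stay deep in the second version: the hub shortens the route to the posts but does nothing about the pagination chain, which is why excessive structural linking is worth reviewing separately.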

Some of the above practices can be seen out in the wild:

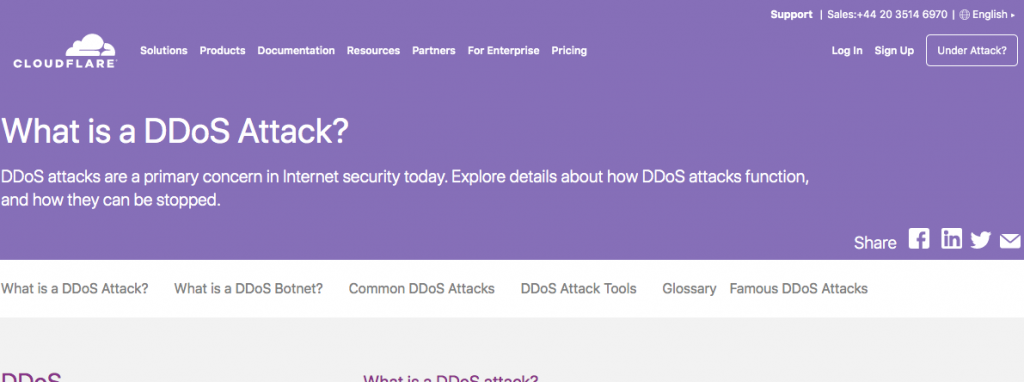

Secondary Navigation Element – Cloudflare, Learning Center

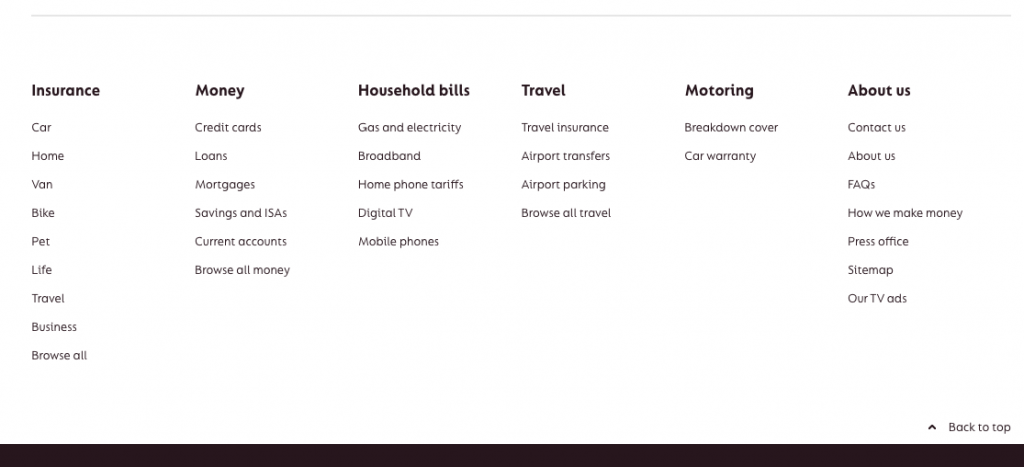

Micro HTML Sitemap, GoCompare Homepage

Macro HTML Sitemap, Money Supermarket Homepage

These are just three good examples of how you can combine strong internal linking with user-focused page designs.